Entry into force of the European AI Regulation: the first questions and answers from the CNIL

Over the past year, the CNIL has been implementing an action plan to promote AI systems that respect individuals' rights over their data, and to provide innovative companies in this field with legal certainty when applying the GDPR. With the publication of the AI Act, the CNIL answers your questions about this new legislation.

The European AI Act has just been published in the Official Journal of the European Union. It enters into force on 1 August 2024, and its provisions will become applicable gradually. Who is concerned? What distinguishes the AI Regulation from the GDPR and how do they complement each other?

Presentation of the AI Act

What does the AI Act provide for?

The European AI Act is the world’s first general legislation on artificial intelligence. It aims to provide a framework for the development, placing on the market and use of artificial intelligence (AI) systems, which may pose risks to health, safety or fundamental rights.

Four levels of risk

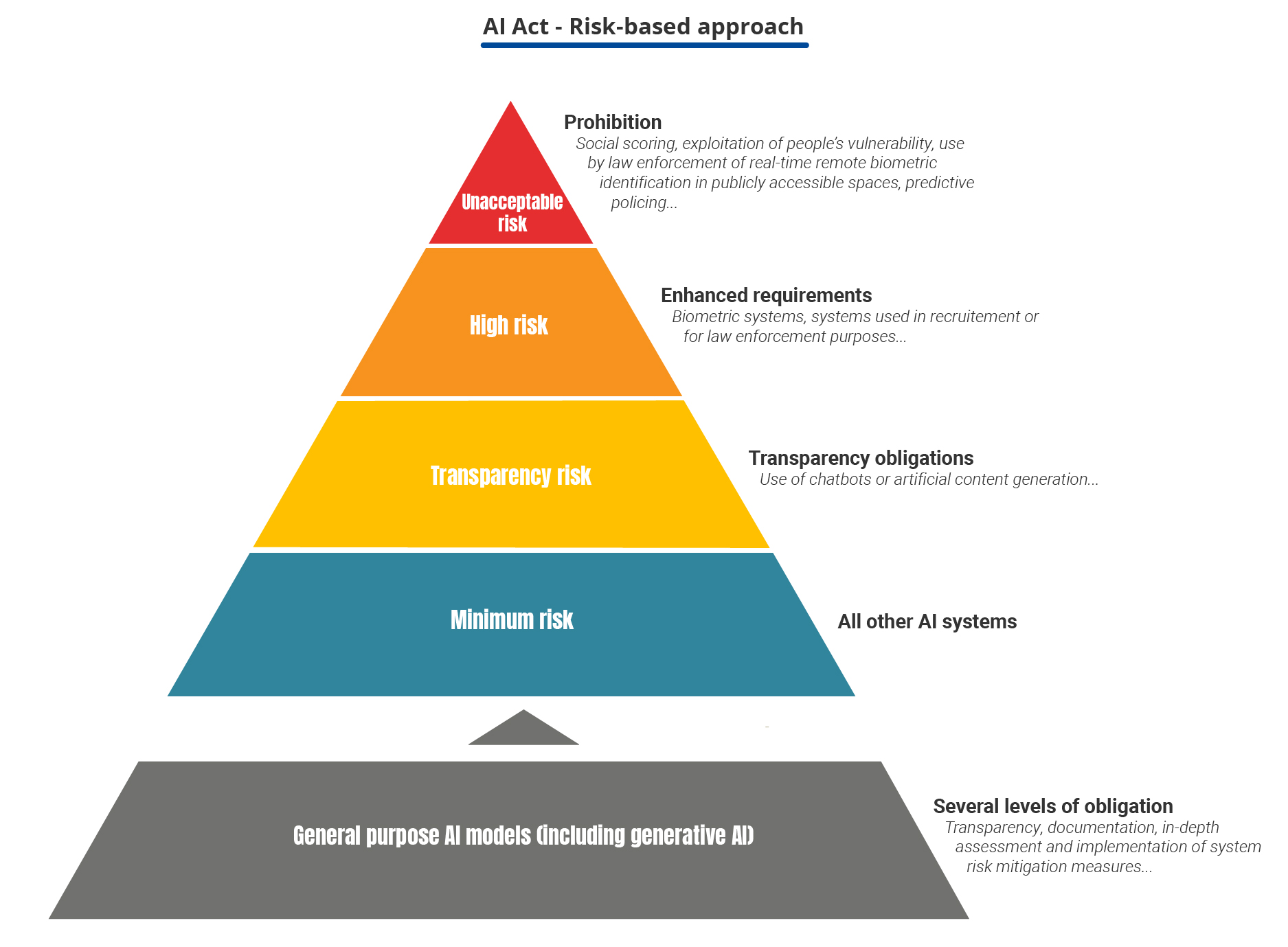

The AI Act follows a risk-based approach by classifying AI systems into four levels:

- Unacceptable risk: the AI Act prohibits a limited set of practices that are deemed contrary to the values and fundamental rights of the EU.

Examples: social scoring, exploitation of people's vulnerabilities, use of subliminal techniques, real-time remote biometric identification in publicly accessible spaces for law enforcement purposes, predictive policing targeting individuals, emotion recognition in the workplace and in educational institutions.

- High risk: the AI Act classifies AI systems as high-risk where they may affect the safety of individuals or their fundamental rights, which justifies subjecting their development to enhanced requirements (conformity assessments, technical documentation, risk management mechanisms). These systems are listed in annex I (systems embedded in products that are already subject to market surveillance, such as medical devices, toys and vehicles) and in annex III (systems used in eight specific areas).

Examples: biometric systems, systems used in recruitment, or for law enforcement purposes.

- Specific transparency risk: the AI Act imposes specific transparency obligations on AI systems, in particular where there is a clear risk of manipulation.

Examples: use of chatbots or systems generating content.

- Minimal risk: for all other AI systems, the AI Act does not impose any specific obligation. According to the European Commission, these account for the vast majority of AI systems currently used, or likely to be used, in the EU.

General purpose AI models

The AI Act also provides a framework for a new category of so-called general-purpose AI models, in particular in the field of generative AI. These models are defined by their ability to perform a wide range of tasks (such as large language models, or LLMs, like those provided by Mistral AI or OpenAI), which makes them difficult to classify in the previous categories.

For this category, the AI Act provides for several levels of obligations, ranging from minimum transparency and documentation measures (article 53) to an in-depth assessment and the implementation of measures to mitigate the systemic risks that some of these models may entail, in particular because of their power: risks of major accidents, misuse to launch cyberattacks, the spread of harmful biases (e.g. based on ethnicity or gender) and discriminatory effects against certain persons, etc. (see in particular recital 110).

Who will monitor the application of the AI Act in the EU and in France?

The AI Act provides for a two-level governance structure.

Governance at European level

European cooperation, aimed at ensuring consistent application of the AI Act, is built around the European AI Board (articles 65 and 66). This body brings together high-level representatives from each Member State and, as an observer, the European Data Protection Supervisor (EDPS, the CNIL's counterpart for the EU institutions). The AI Office (a newly created body within the European Commission) also takes part, but without voting rights.

The AI Act also establishes two other bodies to inform the AI Board's choices:

- an advisory forum (a multi-stakeholder body which may advise the European AI Board and the European Commission in the performance of their tasks); and

- a scientific panel of independent experts (high-level scientists who will support the AI Office in its task of supervising general-purpose AI models, as well as national authorities in their enforcement activities).

Furthermore, general-purpose AI models are supervised by the AI Office, as are AI systems based on those models when the system and the model are developed by the same provider. For high-risk general-purpose AI systems, competence will be shared between the AI Office and the national market surveillance authorities.

Governance at national level

The AI Act provides for the designation of one or more competent authorities to act as market surveillance authority. Within one year, each Member State must put in place the governance structure it considers best suited to ensuring the proper application of the AI Act. If several competent authorities are designated within the same Member State, one of them must act as the national contact point, in order to facilitate exchanges with the European Commission, counterpart authorities and the general public.

The AI Act does not specify the nature of that authority or authorities, with the exception of:

- the European Data Protection Supervisor (EDPS), who is expressly designated as the supervisory authority for the institutions, bodies, offices or agencies of the European Union (with the exception of the Court of Justice of the European Union acting in the exercise of its judicial functions);

- data protection authorities, which are named in connection with the market surveillance of a large number of high-risk AI systems (article 74);

- finally, high-risk AI systems already subject to sector-specific regulation (listed in annex I) will continue to be supervised by the authorities that oversee them today (e.g. the French National Agency for the Safety of Medicines and Health Products (ANSM) for medical devices).

| | AI Act | GDPR |
|---|---|---|
| Authorities in charge | For AI systems: one or more competent authorities responsible for market surveillance and the designation of notified bodies (at the choice of the Member States). For general-purpose AI models: the AI Office within the European Commission | Data Protection Authority of each Member State |
| European cooperation | European AI Board; Administrative Cooperation Group (ADCO) | European Data Protection Board (EDPB); one-stop shop and consistency mechanism |
| Internal referent within organizations | No specifically identified person | Data Protection Officer |

How will the CNIL take the AI Act into account?

The CNIL is responsible for ensuring compliance with the GDPR, which is a regulation based on general principles applicable to all IT systems. It therefore also applies to personal data processed for or by AI systems, including when they are subject to the requirements of the AI Act.

In practice, the CNIL plans to rely on these requirements to guide and support stakeholders in complying with both the AI Act and the GDPR, by offering an integrated view of the applicable rules. The CNIL considers that this new regulation should help actors better understand their obligations when developing or deploying AI.

The AI Act also prohibits certain practices, some of which have already been sanctioned by data protection authorities. For example, the CNIL has already penalized the creation or expansion of facial recognition databases through the untargeted scraping of facial images (from the internet or CCTV footage).

As detailed below, the CNIL remains fully competent to apply the GDPR, for example to providers of general-purpose AI models or systems whose main establishment is in France, in particular when they are not subject to substantive requirements under the AI Act. For instance, Mistral AI and LightOn have their main establishment in France, whereas OpenAI (ChatGPT) and Google (Gemini) have theirs in Ireland.

Over the past year, the CNIL has been running an action plan to provide innovative AI companies with legal certainty in applying the GDPR and to promote AI that respects individuals' rights over their data. This includes publishing how-to sheets, submitted to public consultation, that clarify how to apply the GDPR to AI and provide detailed advice. The CNIL engages with companies in the AI sector and supports some of them in their innovative projects.

When does the AI Act come into effect?

Published in the Official Journal on 12 July 2024, the AI Act will enter into force 20 days later, i.e. on 1 August 2024. Its provisions will then become applicable in stages:

- 2 February 2025 (6 months after entry into force):
  - Prohibitions on AI systems presenting unacceptable risks.

- 2 August 2025 (12 months after entry into force):
  - Application of the rules for general-purpose AI models.
  - Designation of competent authorities at Member State level.

- 2 August 2026 (24 months after entry into force):
  - The AI Act becomes generally applicable, including the rules on high-risk AI systems listed in annex III (AI systems in the fields of biometrics, critical infrastructure, education, employment, access to essential private and public services, law enforcement, migration and border control management, democratic processes and the administration of justice).
  - Implementation by Member State authorities of at least one AI regulatory sandbox.

- 2 August 2027 (36 months after entry into force):
  - Application of the rules on high-risk AI systems listed in annex I (toys, radio equipment, in vitro diagnostic medical devices, civil aviation safety, agricultural vehicles, etc.).
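To check which of these stages already applies on a given date, the timeline can be encoded as data. The following is a minimal Python sketch using the dates listed above. It is a simplified illustration covering only the headline stages, not every transitional provision. The milestone labels are abbreviated paraphrases, and `MILESTONES` and `applicable_on` are hypothetical names chosen for this example.

```python
from datetime import date, timedelta

PUBLICATION = date(2024, 7, 12)
# Entry into force 20 days after publication in the Official Journal
ENTRY_INTO_FORCE = PUBLICATION + timedelta(days=20)

# Hypothetical encoding of the application stages listed above (labels abbreviated)
MILESTONES = [
    (date(2025, 2, 2), "Prohibitions on AI systems presenting unacceptable risks"),
    (date(2025, 8, 2), "Rules for general-purpose AI models; designation of national competent authorities"),
    (date(2026, 8, 2), "General application, incl. high-risk AI systems in annex III; at least one sandbox per Member State"),
    (date(2027, 8, 2), "High-risk AI systems listed in annex I"),
]


def applicable_on(day: date) -> list[str]:
    """Return the labels of the stages whose application date is on or before `day`."""
    return [label for start, label in MILESTONES if day >= start]


if __name__ == "__main__":
    print("Entry into force:", ENTRY_INTO_FORCE)
    for label in applicable_on(date(2025, 9, 1)):
        print("-", label)
```

The application dates are hard-coded rather than derived from `ENTRY_INTO_FORCE`. Each one falls on the 2nd of the month, one day after the corresponding monthly anniversary of entry into force, so simple month arithmetic would land on the wrong day.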

In addition, the entry into application will rely on "harmonised standards" at European level, which must define precisely the requirements applicable to the AI systems concerned. The European Commission has therefore commissioned CEN and CENELEC (the European Committee for Standardization and the European Committee for Electrotechnical Standardization) to draft ten standards. The CNIL has been actively involved in their development since January 2024.

How do the GDPR and the AI Act fit together?

Does the AI Act replace the requirements of the GDPR?

No.

The AI Act does not replace the requirements of the GDPR. It is very clear on that point. On the contrary, it aims to complement them by laying down the conditions required to develop and deploy trusted AI systems.

Specifically, the GDPR applies to all processing of personal data, i.e. both:

- During the development phase of an AI system: a provider of an AI system or model within the meaning of the AI Act will most often be considered a controller under the GDPR;

- And during the use (or deployment) phase of an AI system: a deployer or user of an AI system within the meaning of the AI Act that processes personal data will most often be the controller of that processing under the GDPR.

For its part, the AI Act lays down specific requirements that can contribute significantly to compliance with the GDPR (see below).

AI Act / GDPR: how do I know which regulation(s) apply to me?

Since the AI Act applies exclusively to AI systems and models and the GDPR applies to any processing of personal data, there are four possible situations:

- only the AI Act applies: this will be the case for the provider of a high-risk AI system that does not require the processing of personal data, either for its development or for its deployment,

Example: an AI system applied to power plant management

- only the GDPR applies: this will be the case for the processing of personal data used to develop or use an AI system that is not subject to the AI Act,

Example: an AI system used to assign interest categories to people for advertising purposes, or a system developed exclusively for scientific research purposes

- both apply: this will be the case where a high-risk AI system requires personal data for its development and/or its deployment,

Example: an AI system used for automatic resume sorting

- or neither applies: this will be the case for an AI system with minimal risk that does not involve personal data processing.

Example: an AI system used for simulation in a video game
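The four situations above amount to a two-question decision table. The Python sketch below expresses it, assuming each answer can be reduced to a yes/no flag. In practice, qualifying a system under either regulation requires case-by-case legal analysis. `Applicable` and `applicable_regulations` are hypothetical names. The flag `subject_to_ai_act` stands for "subject to specific AI Act requirements", so a minimal-risk system is encoded as `False`, as in the video game example.

```python
from enum import Enum


class Applicable(Enum):
    """The four possible situations described above."""
    AI_ACT_ONLY = "only the AI Act"
    GDPR_ONLY = "only the GDPR"
    BOTH = "both the AI Act and the GDPR"
    NEITHER = "neither"


def applicable_regulations(subject_to_ai_act: bool, processes_personal_data: bool) -> Applicable:
    """Map two simplified scoping questions to one of the four situations.

    subject_to_ai_act: the system or model is subject to specific AI Act
        requirements (e.g. a high-risk system); minimal-risk systems are False.
    processes_personal_data: personal data is processed for its development
        and/or its deployment.
    """
    if subject_to_ai_act and processes_personal_data:
        return Applicable.BOTH
    if subject_to_ai_act:
        return Applicable.AI_ACT_ONLY
    if processes_personal_data:
        return Applicable.GDPR_ONLY
    return Applicable.NEITHER


# The article's examples, encoded as (subject_to_ai_act, processes_personal_data)
EXAMPLES = {
    "power plant management": (True, False),
    "advertising interest profiling": (False, True),
    "automatic resume sorting": (True, True),
    "video game simulation": (False, False),
}

if __name__ == "__main__":
    for name, (ai_act, personal_data) in EXAMPLES.items():
        print(f"{name}: {applicable_regulations(ai_act, personal_data).value}")
```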

| Applicable AI Act rules | Application of the GDPR |
|---|---|
| Prohibited AI practices | Systematically, for all prohibited practices involving the processing of personal data |
| General-purpose AI models (including those with systemic risk) | Almost systematically: general-purpose AI models are most often trained on personal data |
| High-risk AI systems | In many cases (with notable exceptions such as AI systems used in critical infrastructure, agricultural vehicles, lifts, etc.) |
| AI systems with specific transparency risk | In some cases, in particular systems intended to interact directly with natural persons |

How does the AI Act impact the GDPR?

The AI Act and the GDPR do not regulate the same objects and do not require the same approach.

However, compliance with the AI Act facilitates, or even prepares, compliance with the GDPR: for example, the EU declaration of conformity required by the AI Act must include a statement of the AI system's compliance with the GDPR (annex V).

The AI Act also resolves some tensions between certain GDPR requirements and its own. To this end, on certain well-defined points, it supplements or takes over from the GDPR:

- the AI Act replaces certain GDPR rules for the use by law enforcement authorities of real-time remote biometric identification in publicly accessible spaces, which it permits only very exceptionally and under certain conditions (article 5);

- exceptionally, it allows sensitive data (within the meaning of article 9 of the GDPR) to be processed to detect and correct potential biases that could cause harm, if strictly necessary and subject to appropriate safeguards (article 10);

- it also allows the re-use of personal data, in particular sensitive data, in the AI regulatory sandboxes it establishes. These sandboxes aim to facilitate the development of systems of significant public interest (such as improving the health system) and are supervised by a dedicated authority, which must consult the CNIL beforehand and verify compliance with a number of requirements (article 59).

Transparency and documentation: how to link these AI Act requirements with those of the GDPR?

The AI Act and the GDPR sometimes approach similar notions from a different perspective.

This is the case, for example, with the principle of transparency and documentation requirements, which demonstrate the complementarity of these regulations.

Transparency measures

The GDPR provides for transparency obligations, which essentially consist of informing the persons whose data is processed about how it is processed (why, by whom, how, for how long, etc.). This applies both to processing carried out to develop an AI system and to the use of an AI system on or by an individual (where that use involves the processing of personal data).

The AI Act provides for very similar transparency measures, for example on the data used to train general-purpose AI models (a sufficiently detailed summary of which must be made publicly available under its article 53) or on systems intended to interact with individuals (article 50).

Documentation requirements

The AI Act also requires providers of high-risk AI systems (articles 11 and 13) and of general-purpose AI models (article 53) to supply technical documentation and information to their deployers and users. This documentation must include details of the testing and conformity assessment procedures carried out.

The AI Act also requires certain deployers of high-risk AI systems to conduct a fundamental rights impact assessment (FRIA) (article 27, which mainly concerns public actors and bodies entrusted with public service tasks).

The obligation to conduct a data protection impact assessment (DPIA) under the GDPR complements these requirements of the AI Act well. A DPIA will be presumed to be required from providers and deployers of high-risk AI systems and, above all, it can usefully draw on the documentation required by the AI Act (article 26).

The AI Act also provides that the deployer may rely on the DPIA already carried out by the provider to conduct its fundamental rights impact assessment (article 27). Since the common objective is to enable all necessary measures to be taken to limit the risks to the health, safety and fundamental rights of persons likely to be affected by the AI system, these analyses can even be brought together in the form of a single document to avoid overly burdensome formalism.

These are only a few examples of how the two pieces of legislation complement each other. The CNIL is also actively involved in the ongoing work of the European Data Protection Board (EDPB) on the interplay between personal data protection rules and the AI Act. This work is intended to clarify how the two texts fit together, while consolidating a harmonised interpretation between the CNIL and its European counterparts.

To sum up: What are the differences between the AI Act requirements and the GDPR?

The AI Act and the GDPR have strong similarities and complementarities, but their scopes and approaches differ.

Summary table of the specificities of the AI Act and the GDPR

| | AI Act | GDPR |
|---|---|---|
| Scope | The development, placing on the market or deployment of AI systems and models | Any processing of personal data, regardless of the technical means used (including processing to develop an AI model or system (training data) and processing performed using an AI system) |
| Targeted actors | Mainly providers and deployers of AI systems (and, to a lesser extent, importers, distributors and authorized representatives) | Data controllers and processors (including providers and deployers subject to the AI Act) |
| Approach | Risk-based approach to health, safety or fundamental rights, including through product safety and market surveillance with regard to AI systems and models | Principle-based approach, risk assessment and accountability |
| Main mode of conformity assessment (non-exhaustive) | Internal or third-party conformity assessment, including through a risk management system and against harmonised standards | Accountability principle (internal documentation) and compliance tools (certification, codes of conduct) |
| Main applicable sanctions | Product recall or market withdrawal; administrative fines of up to €35 million or 7% of global annual turnover | Formal notice (which may require the processing to be brought into conformity, or to be limited temporarily or permanently, possibly subject to a periodic penalty payment); administrative fines of up to €20 million or 4% of global annual turnover |